This is an abbreviated form of the discussion; if you want to wade through the whole thing, with lots of numbers, we refer you to our 2009 essay "Precisely Inaccurate" as published on The Baseball Analysts forum.

It is by now well known that the 30 major-league baseball parks are, in effect, 30 fun-house mirrors, each reflecting player performance with a different set of distortions: some minor, some—too many—grotesque. Ideally, the first step one would apply in baseball analysis is to normalize out those distortions, to make the data commensurate and “park-neutral”.

Such normalization is not the trivial task too many “stat analysis” books and web sites seem to believe it is, with their “park factors” calculated in embarrassingly naive form from simple “Home/Road” split data. There are several crucial questions involved; perhaps the threshold one is: what, exactly, is being compared to what?

The only rational basis for truly neutral comparison of men and teams playing baseball in different parks is what they would have achieved had they played all their games in a single imaginary park that exactly averages the idiosyncrasies of all 30 real parks (or, prior to inter-league play, all the parks in a team’s league).

(Inter-league play is “imbecilic” for a couple of reasons. First, it is ridiculous (stronger words come to mind) for a team to play games against teams with whom it is not in competition for anything: no NL team can win an AL Divisional title, and vice-versa. And yes, that also includes inter-divisional games. All this rubbish is because the bean counters who have long run MLB want virtually every team to be considered “competitive”, at least by the fans who pay the toll; any day now, they’ll discover the idea of 30 Divisions, so that every team is a “Division winner” without having to experience the wear and tear of actually playing a game, and have a 181-game “post-season”. It is also ridiculous because of the disaster it wreaks on scheduling. ’Nuff said.)

Now the usual way of compiling “park factors” is to compare home and road data. The standard approach is to use the combined team-and-opponents data for home games versus those for away games. To take a simple example, to get a park factor for runs, one would take the sum of the runs scored at Team X’s home park by both it and its opponents, then divide by home games played (so as to get a per-game value, since, sad to say, the numbers of home and away games are nowadays not necessarily equal); one would then do the same for away data: sum the runs scored on the road by Team X and its opponents and divide by away games played; finally, one would take the ratio of the two. To continue the example, if the home total were 725 runs over 80 games and the away total were 675 runs over 80 games (giving per-game values of 9.063 at home and 8.438 on the road), one might conclude that Team X’s home park increases run scoring by 7.4%, since 9.063/8.438 = 1.074. (That is, to pick one example, exactly the method ESPN uses to this day.) While other sources tinker somewhat with that exact approach, it remains at the heart of most such calculation.
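The home/road ratio just described can be sketched in a few lines of Python. This is a minimal illustration of the naive calculation only, using the hypothetical 725/675-run example above; the function name is our own invention, not ESPN’s or anyone else’s.

```python
def naive_park_factor(home_runs: int, home_games: int,
                      away_runs: int, away_games: int) -> float:
    """Home/road ratio: per-game runs (team + opponents) at home over the same on the road."""
    home_per_game = home_runs / home_games   # e.g. 725 / 80 = 9.0625
    away_per_game = away_runs / away_games   # e.g. 675 / 80 = 8.4375
    return home_per_game / away_per_game


if __name__ == "__main__":
    pf = naive_park_factor(725, 80, 675, 80)
    print(f"{pf:.3f}")  # 1.074
```

Dividing by games before taking the ratio is what keeps the result meaningful when home and away game counts differ; everything else about the method is as crude as it looks.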

Now, to avoid the real-world complications we’ll get to a little farther on, let’s assume that all the parks in major-league use have remained the same and, equally important, each in the same configuration, for a good number of years running. (That assumption is so comically wrong that one at once gets an idea of some of the other problems, but let’s make it for now.) If we apply the methodology described above, what have we calculated? For each park, we have calculated how it affects, in our running example, run scoring. But, again, compared to what? In fact, we have compared each park to all the other parks. Think about it: no one park is being compared to the same base as any other park. Shea Stadium, to pick one at random, would be compared to all parks except Shea Stadium; Dodger Stadium (to pick another park in the same league) would be compared to all parks except Dodger Stadium. Those “compared to” bases are by no means the same thing. Our resultant “park factors” are, in reality, a pile of reeking garbage.

(Well, maybe that’s a bit of an exaggeration—but very obviously, they are severely defective.)

When we wake up to that defect, we realize that it can be almost wholly corrected for. What we need to do is multiply the “away” per-game datum by one less than the number of teams in the league (that is, by the number of Team X’s opponents), add to that Team X’s home per-game datum (that is, Team X plus its opponents in Team X’s own park), then divide the result by the full number of teams in the league. That way, the basis becomes very nearly all parks in the league, rather than “all parks but the one we’re talking about”, and the park factor is the home per-game datum divided by that basis. (It’s “very nearly”, not exactly, because we have no data equivalent to Team X’s batters facing Team X’s pitchers in any park, but it does help a lot.)
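A sketch of that correction, again in Python and again with the hypothetical example figures. The road per-game rate stands in for the other parks, and the home per-game rate adds back the one park the road data leave out. The 16-team league size is an assumption chosen only for illustration, and, like the text, the sketch treats every park as weighing equally in the basis.

```python
def all_parks_basis(home_per_game: float, away_per_game: float, teams: int) -> float:
    """Approximate per-game rate in an 'average' park of the whole league.

    The away rate stands in for the other (teams - 1) parks; the home rate
    adds back Team X's own park, so every park is weighted once.
    """
    opponents = teams - 1
    return (opponents * away_per_game + home_per_game) / teams


def adjusted_park_factor(home_per_game: float, away_per_game: float, teams: int) -> float:
    """Home per-game rate compared with the all-parks basis rather than the road rate."""
    return home_per_game / all_parks_basis(home_per_game, away_per_game, teams)


if __name__ == "__main__":
    home, away = 725 / 80, 675 / 80      # 9.0625 and 8.4375 runs per game
    print(f"{adjusted_park_factor(home, away, teams=16):.3f}")  # 1.069
```

Because the home park now sits inside its own basis, the factor is pulled toward 1.0 (about 1.069 here, against 1.074 from the plain home/road ratio), and that pull shrinks as the number of teams grows.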

But the point of that discourse is not to suggest that we can, with care, get accurate park factors: it is to suggest that too many people have done too little thinking about what they’re really doing when they try to construct “park factors”. In fairness, the concept arose (and was very obviously needed) in a day when ballparks were much less subject to the dizzying pace of modification or outright replacement that is the modern norm, when multi-year per-park data were reasonably stable and could safely be cumulated, and when, therefore, had one taken the sorts of care described above, meaningful park factors could have been calculated.

That is only the beginning of the complications. Consider, for example, the idea of using “games” as the normalizer, to get a “per-game” datum. That stinks, for what should be obvious reasons. The best normalizing basis for a given stat will vary with the stat in question. As others have pointed out, what’s wanted as a basis for any given stat is the opportunities for achieving it. For walks, for example, the “opportunity” is all plate appearances, so the normalized walks datum should not be walks per game but walks per plate appearance. (Actually, to be precise one should probably subtract sacrifice bunts from plate appearances for most stats, since the player laying down a sac was ordered to do so, and that plate appearance was thus not an “opportunity” to take a walk.) For home runs, it could be argued that the basis ought to be at-bats minus strikeouts, though it could also be argued that, since parks affect both strikeout and walk totals, such a basis builds in an interdependence between stats that perhaps ought not to be there. (But, again, this is not to try, right here and now, to develop perfect park-effects answers but only to indicate the related questions that need careful review, review that they rarely get.)
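To make the “opportunities” idea concrete, here is a minimal sketch of per-opportunity normalization, assuming simple combined (team plus opponents) season totals at home and on the road. The `Totals` record, the function names, and all the numbers are hypothetical. The walk basis subtracts sacrifice bunts from plate appearances, as suggested above; the home-run basis is the arguable at-bats-minus-strikeouts choice.

```python
from dataclasses import dataclass


@dataclass
class Totals:
    """Hypothetical combined (team + opponents) season totals in one setting."""
    plate_appearances: int
    at_bats: int
    walks: int
    strikeouts: int
    home_runs: int
    sac_bunts: int


def walk_rate(t: Totals) -> float:
    # Opportunities for a walk: plate appearances minus ordered sacrifice bunts.
    return t.walks / (t.plate_appearances - t.sac_bunts)


def home_run_rate(t: Totals) -> float:
    # One arguable basis for home runs: at-bats minus strikeouts.
    return t.home_runs / (t.at_bats - t.strikeouts)


def rate_factor(home: Totals, away: Totals, rate) -> float:
    """Ratio of a per-opportunity rate at home to the same rate on the road."""
    return rate(home) / rate(away)


if __name__ == "__main__":
    home = Totals(6100, 5400, 560, 1100, 190, 50)
    road = Totals(6200, 5500, 540, 1150, 170, 60)
    print(f"walk factor: {rate_factor(home, road, walk_rate):.3f}")
    print(f"HR factor:   {rate_factor(home, road, home_run_rate):.3f}")
```

Passing the rate function in as a parameter keeps the choice of basis explicit and swappable, which is the point: which basis is right for which stat is itself one of the open questions.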

(Not everyone has been blind to these problems; there was an enlightening if technical 2007 paper titled “Improving Major League Baseball Park Factor Estimates”, by Acharya, Ahmed, D’Amour, Lu, Morris, Oglevee, Peterson, and Swift, published in the Harvard Sports Analysis Collective. (Regrettably, that paper no longer seems to be available online except behind some high paywalls, though you can see the Abstract.) But, though their model seems an improvement over “the ESPN model”, the paper’s Conclusion noted that: “Unfortunately, the lack of longer-term data in Major League Baseball, particularly due to the park relocation undergone by eight National League teams, makes it extraordinarily difficult to assess the true contribution of a ballpark to a team’s offense or defensive strength. While we openly admit this diffculty, we still feel that the ESPN model for Park Factors is inadequate and requires improvement. Its theoretical errors are too significant for the [extent to which] it is currently quoted.” Just so. But despite their model’s theoretical improvements over the standard form, the result can never be better than the data, the issue we address next.)

Most of the sorts of issues raised above, however crucial, have become, in the modern era, essentially moot, the reason being the grotesque invalidity of the temporary assumption we made earlier: that all the parks in major-league use have remained the same, and each in the same configuration, for a good number of years running. Statistical data is only valid and useful when it represents some sample size large enough that sheer chance is not likely to have a large effect on the result. If we toss a coin four times and happen to get three heads, we would be something a lot worse than ill-advised to pronounce as a verity that tossed coins come up heads 75% of the time. It is hard nowadays to find a major-league ballpark that does not have some structural change nearly every season, and it is remarkable how much effect even a seemingly minor change can have on certain stats.
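The coin-toss point can be made concrete with the binomial distribution. This sketch, which assumes a fair coin, computes the exact chance of getting at least a given number of heads. Three or more heads in four tosses turns out to be a 5-in-16 event, hardly rare, whereas the same 75% rate over 40 tosses happens only about 0.1% of the time.

```python
from fractions import Fraction
from math import comb


def prob_at_least(heads: int, tosses: int) -> Fraction:
    """Exact probability of at least `heads` heads in `tosses` tosses of a fair coin."""
    favorable = sum(comb(tosses, k) for k in range(heads, tosses + 1))
    return Fraction(favorable, 2 ** tosses)


if __name__ == "__main__":
    print(prob_at_least(3, 4))                        # 5/16
    print(f"{float(prob_at_least(30, 40)):.4f}")      # 0.0011
```

A single season of data from a freshly altered park is, in this sense, much closer to the four-toss case than to the forty-toss one.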

Moreover, wholly new ballparks have been coming on line at a rate of almost one a year for many years running, and that trend continues. The park factors of those new parks, of course, bear no relation whatever to those of the parks they replace.

And remember: even if Park X remains unchanged for some years in a row, it is almost 100% sure that some other park—or, more likely, several other parks—whose nature goes into the all-parks basis, will have changed (not infrequently in major ways).

Moreover, with the prevalence of retractable roofs these days, even an “unchanged” park can be a very different place from season to season, depending on the number of times the roof is open versus closed in a given year (roofs typically have a huge effect on game stats), not to speak of whether the openings and closings are in daytime or at night. (And some teams open or close the roof in the midst of an ongoing game, sometimes even in the midst of actual play.)

When we consider those sorts of things (not to mention the wildly unequal numbers of games one team may play against different opponents), it should be clear that attempting “park factor” numbers is a task so complicated as to be perhaps impossible: any result we come up with may have flaws, which we cannot hope to correct for, that render it inaccurate. In the essay cited at the top of this section, we examined the reality for one league in a given year, and the variability of the results was staggering. We feel strongly, very strongly, that for now the only practical approach is to concede that various parks have obvious and often substantial effects on performance results, but that corrections for those factors are so unreliable that we are better off using unadjusted data and making broad-brush mental corrections to the results. (That is, saying to ourselves, “Well, sure, but that’s from playing at Coors Field” or suchlike things.) Unpalatable, yes, but less so than the alternative of using nearly meaningless “factors” to make “corrections” that have the capacity to severely mislead.

And with all that, we haven’t even covered all of the problems. Blogger Kincaid, at the “3-D Baseball” site, has written a sound examination of some of those other issues; it is rewarding reading.

This section is now of interest only for historical analysis and as might relate to discussions of the fictitious, factitious “steroids era”. But we leave it here for those uses. (It is also now likely that most reading this will need to have explained that the term “SillyBall” was a pun on the once-popular Oakland A’s marketing slogan “Billy Ball”, about the brand of managing Billy Martin did for them.)

For sixteen years, from 1977 through 1992 inclusive (a period amounting to nearly a generation), the levels of major-league baseball performance, when averaged across an entire league or all of major-league baseball, were quite stable from season to season. There might be an occasional freak year like 1987, but the large-scale totals, which represent the norms against which we judge individual men’s performances, were constant enough that per-season adjustment was not important. Everyone had a pretty good idea what a .300 batting average or a 3.65 American-League ERA meant about a man’s abilities.

It is now clear that, starting sometime in the 1993 season, the baseball itself was somehow substantially juiced. That juicing, which created what we call The SillyBall, is demonstrated exhaustively on the SillyBall page elsewhere on this site, so here we merely accept it as the fact that it is. Baseball before 1993 (at least back to 1977, which is when a different, and more resilient, brand of baseball was introduced into the game) and baseball from 1993 right on through today are simply two different, incommensurable games.

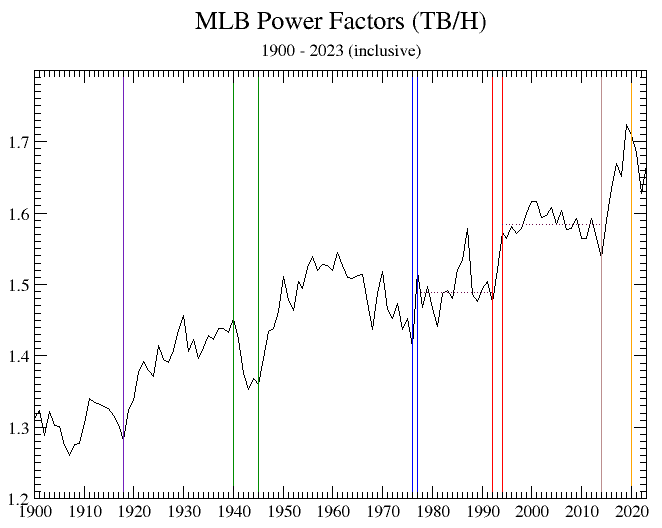

(Since that was first written, we have had further and even more dramatic ball juicings, starting in 2015 or so. We thus insert here a graph from the SillyBall page. The maroon line is the advent of the notorious “Rabbit Ball” prompted by Babe Ruth’s popularity; the pale green lines bracket WWII; the purple lines are the introduction of the Rawlings baseball; the red lines are the 1993 juicing; the dark-green line is the apparent 2015 juicing; and the brown line is the who-knows-what (ball deadening?) in 2019. We say no more here.)

For quite a while, we at Owlcroft calculated and applied an adjustment factor to the raw stats, to make them commensurable with a baseline of results from that 16-year window. We did that so that no one looking at, say, 1998 numbers for a team or man and comparing them with the sort of “built-in” mental benchmarks of what for so long had been “normal” would be misled by the effects of the SillyBall. But we are now more than two decades on from the change, and today’s observers’ mental benchmarks have all been conditioned by, or re-set to, the norms of the SillyBall era, so corrections to the norms of a now-outdated era seem worse than useless. So we don’t do them anymore. There are no men left in the game with seasonal data from 1993 or earlier, so we can ignore the pre-SillyBall data with no effect on career results for anyone playing today.
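The exact form of that old adjustment factor is not spelled out here. Purely as an illustration, and not as a description of the method we actually used, one simple form of such an adjustment scales a player’s rate stat by the ratio of a baseline-era league rate to his own season’s league rate. The function name and every number below are hypothetical.

```python
def era_adjusted_rate(player_rate: float, season_league_rate: float,
                      baseline_league_rate: float) -> float:
    """Hypothetical ratio adjustment: express a player's rate relative to his
    season's league norm, then re-scale it to a baseline-era league norm."""
    return player_rate * (baseline_league_rate / season_league_rate)


if __name__ == "__main__":
    # Hypothetical: a player homers in 5% of his PA in a season whose league rate is 3%,
    # while the baseline-window league rate is assumed to be 2.4%.
    print(f"{era_adjusted_rate(0.05, 0.03, 0.024):.3f}")  # 0.040
```

Any such scaling is only as good as the assumption that a whole season’s league norm can be captured by one number, which is part of why we stopped doing it.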

(Pretty obviously, it is now even more ridiculous to think of attempting to adjust stats for the ball juicings: nowadays, it seems every season is a new era in SillyBall-ishness. Thanks, Mr. Manfred.)

If, incidentally, you wonder whether “SillyBall” isn’t a needlessly pejorative term: we use it for a reason. That reason is our deeply held belief that there is such a thing as an “ideal” baseball scoring level, and that the SillyBall produces results well above that ideal. On what basis might one speak of an “ideal” scoring level? This: on the one hand, the scoring of a run in a ball game ought not to be so rare as to make the game boring and make any given run almost invariably crucial (since a fair proportion of runs are as much luck as skill); on the other hand, it ought not to be so trivial, so much just another rotation of the turnstile, that it’s ho-hum, and let’s just see how many they pile up by the end. A run should be exciting yet not game-controlling. What exactly that translates to in numbers is, of course, somewhat subjective, but our feeling is that a combined game total (both teams, that is) of something close to 8 runs is about right. We don’t want an endless procession of 3-2 and 2-1 games, but neither do we want a parade of 11-7 or 9-2 games.

<rant>If no one will do away with the brain-dead Designated Hitter Rule (known to many as “Clownball”), then “tune” the ball (something MLB is, obviously, pretty good at) till the average per-game combined runs total is somewhere around 8 (4 per team), and leave it there. But it seems that The Lords Of Baseball are hypnotized by the relative market success of football, and have apparently decided that baseball, rather than emphasizing the things that make it unique and wonderful (as a sane person might think logical for “The National Pastime”), should instead attempt to emulate football as much as possible, pre-eminently by jacking run-scoring to the point where it is often difficult to tell at a glance whether a game score in those hideously annoying screen-bottom tickers TV runs is from a football game or a baseball game. (Will basketball-level scoring be the next target?)

Why is the DH “brain-dead”? Because it severely dumbs down the game. A deep part of why baseball became The National Pastime is that it calls for brains as well as brawn: there are strategy and tactics to consider—and fans love (or used to love) to try thinking along with the manager. And one of a manager’s biggest problems was how to handle a struggling pitcher when his turn to bat would come up in the next half inning: put in a reliever for whom you will need to pinch-hit in that next half inning, thus burning a reliever for just a batter or two, or take your chances? No, no: that’s thinking! The motto of the last few Commissioners seems to have been “There’s to be no thinking in baseball!” (Think also about the banning of shifts.) OK, </rant>.

(The recent run-scoring gyrations are most curious. The 2015 ball juicing, which may have been succeeded by more juicing through 2019, caused a dramatic jump in run scoring, peaking in 2019 at almost 9.6 combined R/G. Since then, it has been a bizarre up-and-down see-saw ride: down from 2020 through 2022, then suddenly up in 2023, and then markedly down again in 2024. Heaven knows what’s next.…)

Having come this far on the site, you may be wondering where to look next. If you have been following the sequence of introductory pages set forth on our home page, you have completed the suggested introductory material. You can now return to the home page and review the list of actual results we offer—or instead follow up your reading with the pages listed there at More Useful Information.

All content copyright © 2002 - 2025 by The Owlcroft Company.

This web page is strictly compliant with the WHATWG (Web Hypertext Application Technology Working Group) HyperText Markup Language (HTML5) Protocol versionless “Living Standard” and the W3C (World Wide Web Consortium) Cascading Style Sheets (CSS3) Protocol v3, because we care about interoperability.

This page was last modified on Saturday, 26 October 2024, at 6:27 pm Pacific Time.